Kubernetes NodePort vs LoadBalancer vs Ingress

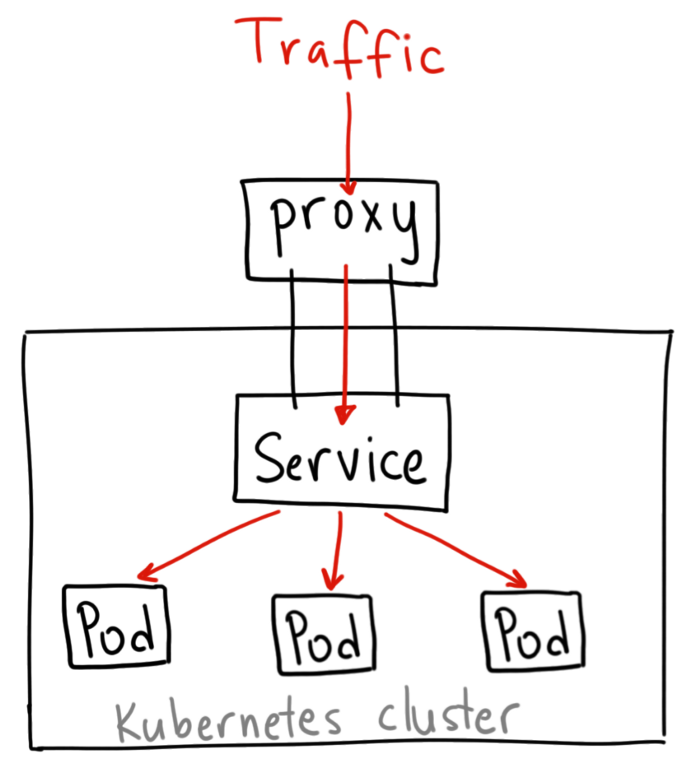

ClusterIP

A ClusterIP service is the default Kubernetes service. It gives you a service inside your cluster that other apps inside your cluster can access. There is no external access.

The YAML for a ClusterIP service looks like this:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-internal-service
    spec:
      selector:
        app: my-app
      type: ClusterIP
      ports:
      - name: http
        port: 80
        targetPort: 80
        protocol: TCP
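The selector in this manifest is what ties the service to its backends: traffic is forwarded only to Pods labelled `app: my-app`. A minimal sketch of a Deployment that would match, assuming a hypothetical `my-app` name and an `nginx` image standing in for the real application:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app              # hypothetical name, not from the original article
spec:
  replicas: 2
  selector:
    matchLabels:
      app: my-app
  template:
    metadata:
      labels:
        app: my-app         # must match the Service's spec.selector
    spec:
      containers:
      - name: web
        image: nginx:1.25   # placeholder image that listens on port 80
        ports:
        - containerPort: 80 # the port the Service's targetPort points at
```

If the labels do not match, the service still exists but has no endpoints, so requests to it fail.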

Even without external access, you can reach a ClusterIP service from your own machine through the Kubernetes proxy:

kubectl proxy --port=8080

It’s possible to navigate through the Kubernetes API to access this service using this scheme:

<http://localhost:8080/api/v1/proxy/namespaces/

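To access the service defined above, use the following address (Kubernetes 1.10 removed the older /api/v1/proxy/ form, so the proxy segment now goes at the end of the path):

http://localhost:8080/api/v1/namespaces/default/services/my-internal-service:http/proxy/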

When would you use this?

There are a few scenarios where you would use the Kubernetes proxy to access your services.

- Debugging your services, or connecting to them directly from your laptop

- Allowing internal traffic, displaying internal dashboards, etc.

Because this method requires you to run kubectl as an authenticated user, you should NOT use this to expose your service to the internet or use it for production services.

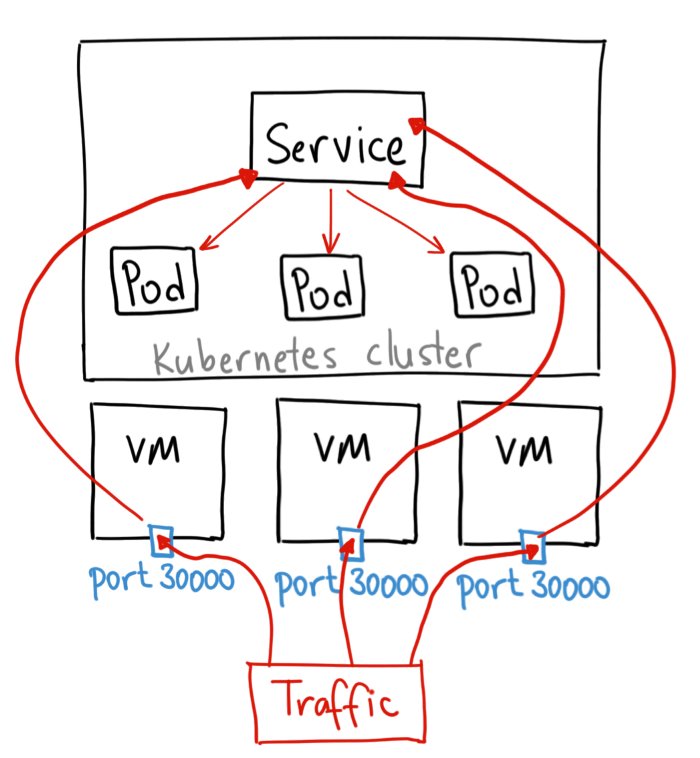

NodePort

A NodePort service is the most primitive way to get external traffic directly to your service. NodePort, as the name implies, opens a specific port on all the Nodes (the VMs), and any traffic that is sent to this port is forwarded to the service.

The YAML for a NodePort service looks like this:

    apiVersion: v1
    kind: Service
    metadata:
      name: my-nodeport-service
    spec:
      selector:
        app: my-app
      type: NodePort
      ports:
      - name: http
        port: 80
        targetPort: 80
        nodePort: 30036
        protocol: TCP

A NodePort service differs from a normal “ClusterIP” service in two ways. First, the type is “NodePort.” Second, there is an additional field called nodePort that specifies which port to open on the nodes. If you don’t specify it, Kubernetes picks a free port from the NodePort range at random. Most of the time you should let Kubernetes choose the port.
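Letting Kubernetes choose the port simply means leaving the `nodePort` field out. A sketch of the same kind of service without it (the service name here is an assumption made for illustration):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-nodeport-auto   # hypothetical name
spec:
  selector:
    app: my-app
  type: NodePort
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
    # nodePort omitted: Kubernetes allocates one from the NodePort range
```

The allocated port can be read back afterwards, for example with `kubectl get service my-nodeport-auto`.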

When would you use this?

There are many downsides to this method:

- You can only have one service per port

- You can only use ports 30000–32767 (the default NodePort range)

- If your Node/VM IP address changes, you need to deal with that

For these reasons, I don’t recommend using this method in production to directly expose your service. If you are running a service that doesn’t have to be always available, or you are very cost sensitive, this method will work for you. A good example of such an application is a demo app or something temporary.

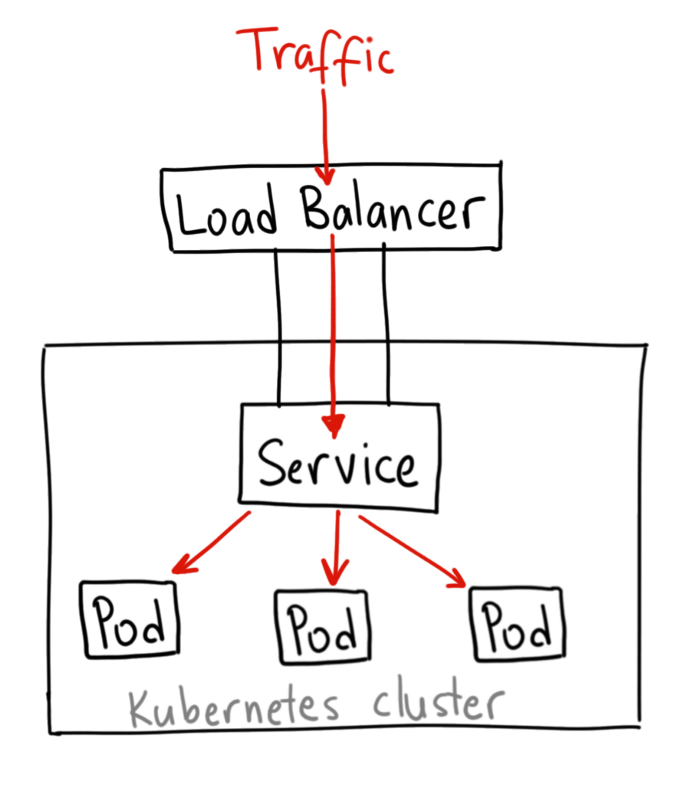

LoadBalancer

A LoadBalancer service is the standard way to expose a service to the internet.
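A LoadBalancer manifest differs from the ClusterIP one only in its `type`. A minimal sketch following the pattern of the earlier examples; the service name is hypothetical, and the cluster is assumed to run on a provider (or with an add-on) that can provision load balancers, otherwise the external IP stays pending:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-loadbalancer-service   # hypothetical name
spec:
  selector:
    app: my-app
  type: LoadBalancer              # asks the platform for an external load balancer
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
```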

When would you use this?

If you want to directly expose a service, this is the default method. All traffic on the port you specify will be forwarded to the service. There is no filtering, no routing, etc. This means you can send almost any kind of traffic to it, such as HTTP, TCP, UDP, WebSockets, or gRPC.

The big downside is that each service you expose with a LoadBalancer will get its own IP address, and you have to pay for a LoadBalancer per exposed service, which can get expensive!

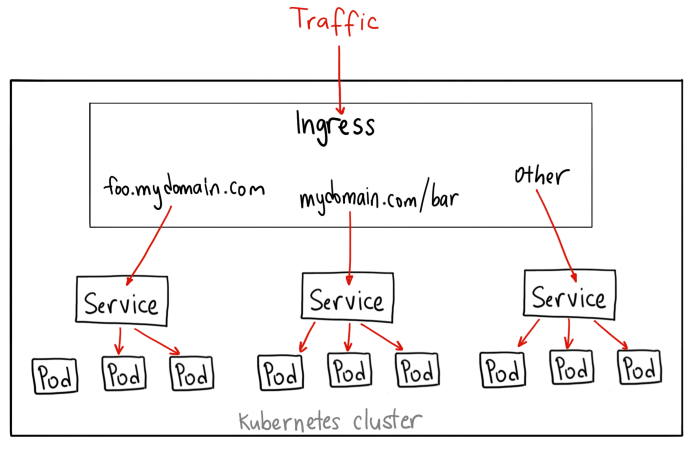

Ingress

Unlike all the above examples, Ingress is actually NOT a type of service. Instead, it sits in front of multiple services and acts as a “smart router” or entrypoint into your cluster.

You can do a lot of different things with an Ingress, and there are many types of Ingress controllers that have different capabilities.

The YAML for an Ingress object might look like this:

    apiVersion: networking.k8s.io/v1beta1
    kind: Ingress
    metadata:
      name: cafe-ingress
    spec:
      # ingressClassName: nginx # use only with k8s version >= 1.18.0
      tls:
      - hosts:
        - cafe.example.com
      rules:
      - host: cafe.example.com
        http:
          paths:
          - path: /tea
            backend:
              serviceName: tea-svc
              servicePort: 80
          - path: /coffee
            backend:
              serviceName: coffee-svc
              servicePort: 80
      - host: technotes.adelerhof.eu
        http:
          paths:
          - path: /
            backend:
              serviceName: technotes-svc
              servicePort: 80
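The `networking.k8s.io/v1beta1` API used here was removed in Kubernetes 1.22. On clusters running 1.19 or later, the stable `networking.k8s.io/v1` API is available. A sketch of the first host's rules in that form, assuming an IngressClass named `nginx` exists in the cluster:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: cafe-ingress
spec:
  ingressClassName: nginx   # assumption: an IngressClass with this name exists
  rules:
  - host: cafe.example.com
    http:
      paths:
      - path: /tea
        pathType: Prefix    # pathType is required in v1
        backend:
          service:          # replaces serviceName/servicePort from v1beta1
            name: tea-svc
            port:
              number: 80
      - path: /coffee
        pathType: Prefix
        backend:
          service:
            name: coffee-svc
            port:
              number: 80
```

The second host would be converted the same way.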

When would you use this?

Ingress is probably the most powerful way to expose your services, but it can also be the most complicated. There are many types of Ingress controllers, including the Google Cloud Load Balancer, Nginx, Contour, Istio, and more. There are also add-ons that work alongside Ingress controllers, such as cert-manager, which can automatically provision SSL certificates for your services.
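As a rough illustration of the cert-manager case: once cert-manager is installed, annotating an Ingress with an issuer asks it to obtain a certificate and store it in the secret named under `tls`. The issuer name `letsencrypt-prod` and the secret name `cafe-tls` below are hypothetical, and a matching ClusterIssuer is assumed to exist already.

```yaml
apiVersion: networking.k8s.io/v1   # stable Ingress API, Kubernetes 1.19+
kind: Ingress
metadata:
  name: cafe-ingress
  annotations:
    cert-manager.io/cluster-issuer: letsencrypt-prod   # hypothetical ClusterIssuer
spec:
  tls:
  - hosts:
    - cafe.example.com
    secretName: cafe-tls   # hypothetical; cert-manager stores the certificate here
  rules:
  - host: cafe.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: tea-svc
            port:
              number: 80
```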

Ingress is most useful if you want to expose multiple services under the same IP address, and these services all use the same L7 protocol (typically HTTP). You only pay for one load balancer.

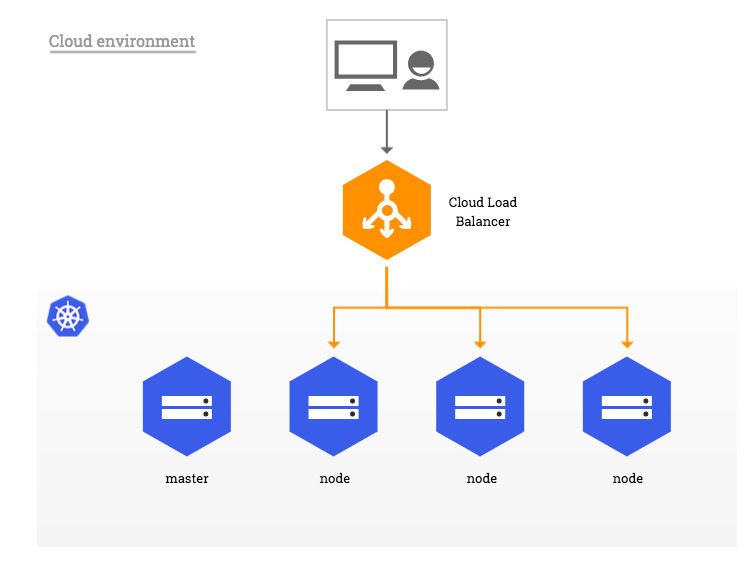

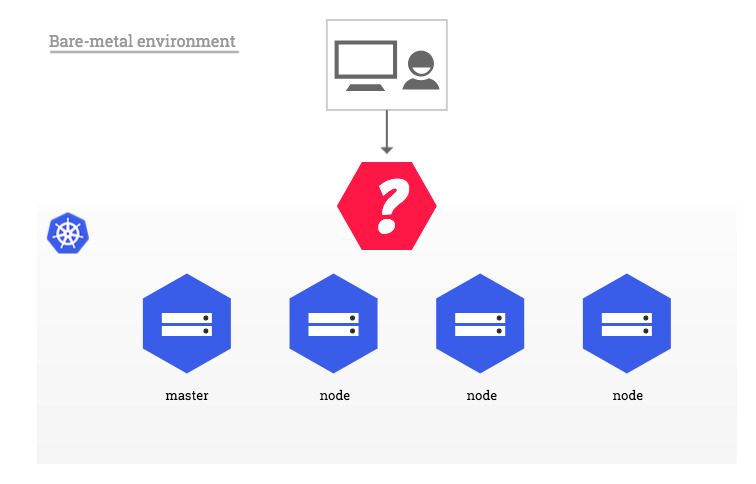

Cloud Environment vs Bare-Metal

In traditional cloud environments, network load balancers are available on demand and provide a single point of contact between external clients and the NGINX Ingress controller and, indirectly, any application running inside the cluster.

Bare-metal clusters lack this facility, so a few recommended approaches exist to work around it (see https://kubernetes.github.io/ingress-nginx/deploy/baremetal/).

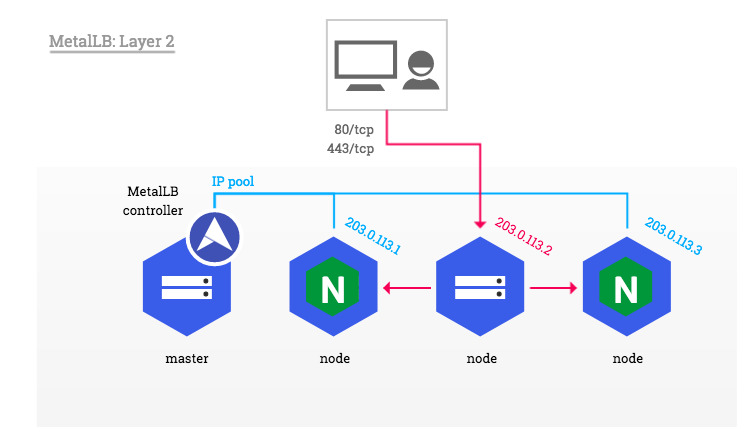

Nginx Ingress Controller combined with MetalLB

This section describes how to use NGINX as the Ingress controller for our cluster, combined with MetalLB, which acts as the network load balancer for all incoming traffic.

The principle is simple: we build our deployment on ClusterIP services and use MetalLB as a software load balancer.

MetalLB Installation

The official documentation can be found here: https://metallb.universe.tf/
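Once MetalLB is installed, it needs a pool of addresses to hand out. A minimal layer 2 sketch, assuming MetalLB 0.13 or later (which is configured through custom resources) and a free address range on the local network; the range and the resource names are placeholders:

```yaml
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: default-pool              # hypothetical name
  namespace: metallb-system
spec:
  addresses:
  - 192.168.1.240-192.168.1.250   # placeholder range; use free addresses on your LAN
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: default-l2                # hypothetical name
  namespace: metallb-system
spec:
  ipAddressPools:
  - default-pool                  # announce addresses from the pool above
```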

NGINX Ingress Installation

All installation details can be found here: https://docs.nginx.com/nginx-ingress-controller/installation/installation-with-manifests/
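With MetalLB in place, the Ingress controller itself can be exposed through a LoadBalancer service, to which MetalLB assigns an address from its pool. A sketch, assuming the controller runs in a namespace called `nginx-ingress` with Pods labelled `app: nginx-ingress`; both are assumptions to check against your installation:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress
  namespace: nginx-ingress   # assumed namespace of the controller
spec:
  type: LoadBalancer         # MetalLB allocates the external IP
  selector:
    app: nginx-ingress       # assumed label on the controller Pods
  ports:
  - name: http
    port: 80
    targetPort: 80
    protocol: TCP
  - name: https
    port: 443
    targetPort: 443
    protocol: TCP
```

The application services behind the Ingress can then stay of type ClusterIP.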

Sources:

https://medium.com/google-cloud/kubernetes-nodeport-vs-loadbalancer-vs-ingress-when-should-i-use-what-922f010849e0

https://blog.dbi-services.com/setup-an-nginx-ingress-controller-on-kubernetes/

https://kubernetes.github.io/ingress-nginx/deploy/baremetal/